Frohe Weihnachten, ein schönes Fest, und einen guten Rutsch ins neue Jahr

wünscht euch

Leah Neukirchen

Merry Christmas and a Happy New Year!

NP: Trembling Bells—Willows Of Carbeth

Frohe Weihnachten, ein schönes Fest, und einen guten Rutsch ins neue Jahr

wünscht euch

Leah Neukirchen

Merry Christmas and a Happy New Year!

NP: Trembling Bells—Willows Of Carbeth

In a previous post, I discussed how you can determine that you are pid 1, the init process, when the system is booting. Today, we’ll consider the end of the init process: system shutdown.

If you look into a book on Unix system administration, the classic way to manually turn off a Unix system contains a few steps:

init 1 or shutdown).sync.What step 1 essentially does is is kill all processes (except for pid 1), and spawn a new shell. (Unix doesn’t have a concept of “single-user mode” in kernel space.) This is necessary to orderly stop all daemons, kill all remaining user processes, and close open files that would stop step 2 from progressing.

Step 3 is necessary to ensure the root file system is in a consistent state. Since we cannot unmount it (we still use it!), remounting it read-only is the best available way to ensure consistency.

Finally, we flush buffers, and then it’s safe to turn off the machine.

Now, since this is not my first rodeo with writing custom init scripts, I’ve implemented these steps a bunch of times and found out some things which were not obvious.

So let’s see how some of this works in detail.

This sounds easy, but is tricky to get right. If you are not pid 1,

using kill(-1, SIGTERM) will send SIGTERM to all processes except for

pid 1 and itself

(on Linux on the BSDs). You should then send a SIGCONT to all processes, so

stopped processes will wake up and handle the SIGTERM, too. Then you

usually wait a bit for their graceful shutdown and run kill(-1, SIGKILL)

to kill the rest. Only two processes, you and init, should

remain. The main problem with this is you don’t know when all

processes have exited after the first kill, so there’s necessarily a

delay.

It is therefore better to let pid 1 do the killing and reaping. Again

we run kill(-1, SIGTERM); kill(-1, SIGCONT), and then do the usual

reaping an init process should do. When waitpid fails with ECHILD,

we know there’s no child left over. Else, after some timeout, you fall

back to sending SIGKILL to the rest, and reap again.

(As a historical aside, Alan Cox pointed out that on Unix V7, wait(2) in pid 1 keeps waiting for itself, since the parent of pid 1 is pid 1 itself. However all contemporary systems deal with this fine.)

In the real world, you still want a timeout here. A process could

be stuck in state D and not respond to SIGKILL either. We still

want to power down at some point and not lock up shutdown due to this.

On Linux, you do this by calling mount -o remount,ro /

or the equivalent syscall mount("/", "/", "", MS_REMOUNT | MS_RDONLY, "").

This can fail when the “file system is still in use” with error code

EBUSY.

I ran into this EBUSY error a few times before, and lately a lot during development, and I finally tried to track down why it happens. Usually, it’s caused by some process that still has a file handle open, but at this point of shutdown, there’s nothing running anymore except for our init itself, so how can that fail?

At first I thought it was just some erratic behavior (race condition etc.), but then I realized I could trigger the error each time I updated init (which happens a lot when you are testing code…). However, when I didn’t update init, everything shutdown fine!

Now, I update init in my testing VM like this:

scp leah@10.0.2.2:prj/.../init /bin/init- && mv /bin/init- /bin/init

We can’t overwrite /bin/init directly, else we get ETXTBSY. So we

do the usual dance of atomically renaming the file into the

destination, similar to how package managers do it.

On an inode level, what does this do? Overwriting the /bin/init

file decrements the st_nlink field, usually to 0, which means the

old file is deleted. However, as the init binary is still running (of

course), the inode is kept alive. We can verify this:

# stat -L /proc/1/exe

File: /proc/1/exe

Size: 193032 Blocks: 384 IO Block: 4096 regular file

Device: 8,1 Inode: 137195 Links: 0

Access: (0755/-rwxr-xr-x) Uid: ( 0/ root) Gid: ( 0/ root)

Access: 2024-12-19 17:29:21.289000000 +0000

Modify: 2024-12-19 17:29:21.302000000 +0000

Change: 2024-12-20 15:18:14.480000000 +0000

Birth: 2024-12-19 17:29:21.289000000 +0000

The link count is zero indeed.

But this causes the file system to stay busy,

since it wants to delete the file when it will be closed, so it cannot

be remounted read-only while there are open file handles to deleted files!

(Thanks to Simon Richter for explaining

this.) This is

also the reason for the occasional shutdown issues I had using

runit—likely the runit binary was updated during the uptime.

I tried many ways to work around this (old posts may suggest we can

perhaps link /proc/1/exe back into a file, but this behavior has been

forbidden in Linux since 2011), but ultimately I think this is a

policy problem and not one of pid 1 itself. I therefore suggest a

simple workaround that users of other init systems can use as well:

in the startup scripts, after the root filesystem is mounted writable,

we just make a backup link for the currently booted init:

ln -f /sbin/init /sbin/.init.old

This ensures that even when /sbin/init is overwritten, its link

count doesn’t drop to zero and we don’t block remounting read-only,

preventing a clean shutdown.

That’s it for now, let’s see what other surprises appear in the future.

NP: Laura Jane Grace—Punk Rock In Basements

For the last few years, I have built a centralized monitoring system based on Prometheus that gathers various metrics across my whole private fleet of servers.

Since writing Prometheus exporters is rather simple, I have written some of them myself:

Additionally I use the following pre-made exporters:

As you can see, this is quite a lot of different exporters running on different hosts.

A few months ago I decided to rebuild the centralized metrics server on top of VictoriaMetrics and with proper access control.

Why VictoriaMetrics? I tried it for a bit and it seems to use less RAM and less storage while supporting long term storage nicely. It also has better mechanisms for importing and exporting data than Prometheus.

Setting up victoria-metrics is very easy. I run it like this:

victoria-metrics -enableTCP6 \

-storageDataPath=/srv/victoria-metrics \

-retentionPeriod=99y \

-httpListenAddr=127.0.0.1:8428 \

-selfScrapeInterval=20s \

-promscrape.config /usr/local/etc/prometheus/prometheus.yaml

Note that you need to enable IPv6 manually all the time.

The prometheus.yaml file is compatible with stock Prometheus.

I then use Grafana to connect to it, using the Prometheus protocol.

I don’t consider most of above metrics to be super private, but they certainly leak metadata (e.g. am I at home or not, how much mail do I get) so I don’t want to publish them on the net accessible to everyone that finds them.

Since Prometheus mainly favors a pull based model, we need to figure out ways to protect the data.

“Obvious” solutions like using mTLS or a maintenance VPN would require reconfiguring many machines and were deemded too much effort.

Essentially, I found three solutions that I will describe in detail:

This is the easiest mechanism, when your host already runs a web server: simply use it as a proxy for the metrics, and filter access by IP address or Basic Auth. Since most webservers have HTTPS today already, you get encryption for free.

A simple nginx configuration to do this would be:

location /metrics {

proxy_http_version 1.1;

proxy_pass http://127.0.0.1:9100/metrics;

access_log off;

allow 127.0.0.1;

allow ...;

deny all;

}

You need to configure the metrics exporter to only listen on localhost.

This is a quite elegant solution that provides encryption, flexible

configuration, and can be used when the scrape target doesn’t have a

public IP address. OpenSSH provides the -R flag to do reverse port

forwarding, but most people don’t know it also can be used to run a

reverse SOCKS proxy!

For this, I create a separate Unix user on scrape target and server, and assign it a SSH key. Then, the target runs:

ssh -o ServerAliveInterval=15 -o ExitOnForwardFailure=yes -R8083 server.example.com -NT

You should run this using service supervision so it tries to reconnect on network failures.

On the server side, you restrict access to only open a port

using /etc/ssh/authorized_keys/scrape-user:

restrict,port-forwarding,permitlisten="8083" ssh-ed25519 ....

Then, the server can use port 8083 as a SOCKS proxy to access the network of the scrape target directly! So you can write a scrape config like:

- job_name: 'nano-exporter-hecate'

proxy_url: 'socks5://127.0.0.1:8083'

static_configs:

- targets: ['127.0.0.1:9100']

labels:

instance: 'hecate.home.vuxu.org:9100'

- targets: ['10.0.0.119:9100']

labels:

instance: 'leto.home.vuxu.org:9100'

Here, we use a host in my home network that is always on, and can also

safely scrape other hosts in the same LAN.

(Note that the IP addresses in targets are resolved relative to the SSH client.)

I used the SSH approach for my notebook as well, but there’s the

problem that we lose data when there’s no Internet connection

available. I have thus moved my notebook to a solution using

vmagent, which is

included with VictoriaMetrics.

vmagent does scrape metrics just like VictoriaMetrics (and also

supports all other metrics protocols, but I don’t use them), but it

simply forwards everything via the Prometheus remote write protocol,

and locally buffers data if it can’t forward the metrics currently.

On the server side, we need to provide access to the remote write

protocol. Since VictoriaMetrics operates without internal access

control, we can use the

vmauth gateway to

implement Basic Auth over TLS. (Again, you can use an existing HTTPS

server and proxy it, but in this case I don’t have a HTTPS server on

the metrics host.)

vmauth needs some configuration. First, we create a self-signed

certificate (Let’s Encrypt support is limited to the commercial

version of VictoriaMetrics unfortunately):

openssl req -x509 -newkey ed25519 \

-keyout /usr/local/etc/vmauth/key.pem \

-out /usr/local/etc/vmauth/cert.pem \

-sha256 -days 3650 -nodes -subj "/CN=server.example.org" \

-addext "subjectAltName = DNS:server.example.org"

I then run it as:

vmauth -enableTCP6 \

-tls \

-tlsCertFile=/usr/local/etc/vmauth/cert.pem \

-tlsKeyFile=/usr/local/etc/vmauth/key.pem \

-reloadAuthKey=secret \

-flagsAuthKey=secret \

-metricsAuthKey=secret \

-pprofAuthKey=secret \

-auth.config=/usr/local/etc/vmauth/vmauth.yaml

(I think it’s unfortunate that we need to add auth-keys now, as internal and forwarded API are exposed on the same port…)

The vmauth.yaml configures who can access:

users:

- username: "client"

password: "evenmoresecret"

url_prefix: "http://localhost:8428/"

Here, localhost:8428 is the VictoriaMetrics instance.

Finally, on the scrape target we can now run vmagent:

vmagent -enableTCP6 \

-promscrape.config=/etc/vmagent/promscrape.yml \

-httpListenAddr=127.0.0.1:8429 \

-remoteWrite.url=https://server.example.org:8427/api/v1/write \

-remoteWrite.label=vmagent=myhostname \

-remoteWrite.retryMinInterval=30s \

-remoteWrite.basicAuth.username=client \

-remoteWrite.basicAuth.passwordFile=/etc/vmagent/passwd \

-remoteWrite.tlsCAFile=/etc/vmagent/cert.pem

The cert.pem is copied from the server, the password is stored in /etc/vmagent/passwd.

Note that the vmagent instance is configured locally, so we can again

scrape targets that are only reachable from it. We also can adjust

the scrape targets without having to touch the metrics server itself.

NP: Godspeed You! Black Emperor—Broken Spires At Dead Kapital

A few months ago I found an article on how to organize your training plan using logic programming, which used Haskell to implement a logic language.

At first, I thought this is a good problem to solve in Prolog, but

there’s a difficulty: Prolog makes it quite hard to specify models

that have multiple possible outcomes (yes, you can work with backtracking, but

it gets tricky when you start to combine multiple predicates or fiddle

around with bagof).

An alternative to classic Prolog is the concept of Answer Set Programming, based on the Stable Model Semantics by Gelfond and Lifschitz (1988). Here, the idea is that the logical formulas specify models, and the goal of Answer Set Programming is to compute the Answer Sets, i.e. a set of models fulfilling the formulas.

I can’t do a full introduction to Answer Set Programming here, but I can recommend the overview article Answer set programming at a glance by Brewka et. al., as well as the short article What Is Answer Set Programming? and the book Answer Set Programming by Lifschitz.

Essentially, we can think of a stable model as a maximal set of atoms that are true (derivable from the rules) without being in conflict to other propositions. If we don’t use negation, that pretty boring

However, consider this logic program:

q :- not p.

p :- not q.

Contrary to what you may expect, it has two models: {p} and {q}. This is because ASP uses default negation, which means “not A” is assumed to hold unless A is derived. Both cannot be true at the same time, but likewise the empty set is not a model because it can be extended to {p} or {q}.

The program p :- not not p. has two models, {} and {p}.

Let’s do a small problem first so we get a feel for the tooling. I chose to use clingo, which is the most advanced open source implementation of Answer Set Programming.

If your distribution doesn’t include it, you can run it from Nix:

nix shell nixpkgs#clingo

Let’s model a directed graph with four nodes:

edge(a,b).

edge(a,c).

edge(b,d).

edge(c,d).

Our problem is to find paths from a to d. We can define a

relation step, which either means “we can step from X to d”

or “we can step from X to Y when there’s an edge from X to Y and

another step from Y”:

0 { step(X,E) } 1 :- edge(X,E), E = d.

0 { step(X,Y) } 1 :- edge(X,Y), step(Y,_).

The 0 { ... } 1 decoration means that each step can be taken at most once.

Finally, we need to specify our goal, which in tradition of logic programming, is written as a negation:

:- not step(a,_).

#show step/2.

This means “it is not the case that there’s no step starting from a”.

The #show instructions limits clingo to only output the binary

step relation. Let’s run it:

% gringo graph.pl | clasp

clasp version 3.3.10

Reading from stdin

Solving...

Answer: 1

step(b,d) step(a,b)

SATISFIABLE

Models : 1+

Calls : 1

Time : 0.001s (Solving: 0.00s 1st Model: 0.00s Unsat: 0.00s)

CPU Time : 0.000s

Clingo has found a solution. We can go from a to b and from b to d.

We can also ask for all solutions, by passing -n0:

% gringo graph.pl | clasp -n0

clasp version 3.3.10

Reading from stdin

Solving...

Answer: 1

step(b,d) step(a,b)

Answer: 2

step(c,d) step(b,d) step(a,b)

Answer: 3

step(c,d) step(a,c)

Answer: 4

step(c,d) step(b,d) step(a,c)

Answer: 5

step(c,d) step(b,d) step(a,b) step(a,c)

SATISFIABLE

Models : 5

Calls : 1

Time : 0.000s (Solving: 0.00s 1st Model: 0.00s Unsat: 0.00s)

CPU Time : 0.000s

Here we see there are five possible models of this system (but every model except the first and the third has superflous steps).

To see why the 0 { ... } 1 matters, here’s what happens without it:

Answer: 1

step(b,d) step(c,d) step(a,b) step(a,c)

SATISFIABLE

Now there is only one model, but it contains all paths. Cardinality bounds are implemented using negation internally.

Back to the original task: Planning Weekly Workouts in 100 lines of Haskell.

The goal is to create a weekly plan of training exercises according to some specific rules.

First, we need some definitions related to weekdays:

weekday(1..7).

n_weekday(D, DD) :- weekday(D), weekday(DD), (D+1) \ 7 == DD \ 7.

nn_weekday(D, DD) :- weekday(D), weekday(DD), (D+2) \ 7 == DD \ 7.

two_weekday(D, DD) :- n_weekday(D, DD).

two_weekday(D, DD) :- nn_weekday(D, DD).

two_weekday(D, DD) :- two_weekday(DD, D).

This is a bit more complicated because we later need “day after”

and “within two days”. (\ means modulo.)

Now, let’s define workout and running exercises:

workout(push; pull; leg; none).

running(long; short; none).

To the plan: each weekday has one workout and one running exercise:

{ plan(D,W,R) : workout(W), running(R) } = 1 :- weekday(D).

We then add the constraints:

% No running on leg day

plan(D, leg, none) :- plan(D, leg, _).

% Short intervals run is after an outdoor pull/push workout

:- plan(_, none, short).

% Workout on Monday outdoors always, not legs

:- plan(1, none, _).

:- plan(1, leg, _).

% Pull day during the week?

:- plan(6..7, pull, _).

% One long run, one short run

{ plan(D,W,long) : weekday(D), workout(W) } = 1.

{ plan(D,W,short) : weekday(D), workout(W) } = 1.

% Two push, two pull, two leg

:- not { plan(D,push,R) } = 2.

:- not { plan(D,pull,R) } = 2.

:- not { plan(D,leg,R) } = 2.

% Long run on weekend

{ plan(6..7,W,long) : workout(W) } = 1.

% Run spaced out at least 2 days

:- plan(D,_,short), plan(DD,_,long), two_weekday(D, DD).

% Space out workouts at least 2 days

:- plan(D,W,_), plan(DD,W,_), W != none, two_weekday(D, DD).

% No leg day before short run

% No leg day before a long run

{ plan(D,W,R) : running(R), R != none, workout(W), plan(DD,leg,_), n_weekday(D, DD) } = 1.

#show plan/3.

clingo generates the same three plans as the Haskell program:

Solving...

Answer: 1

plan(5,leg,none) plan(2,leg,none) plan(1,pull,none) plan(3,push,none) plan(4,pull,short) plan(6,push,none) plan(7,none,long)

Answer: 2

plan(5,leg,none) plan(2,leg,none) plan(1,pull,none) plan(3,push,none) plan(4,pull,short) plan(6,none,none) plan(7,push,long)

Answer: 3

plan(7,leg,none) plan(4,leg,none) plan(1,pull,none) plan(2,push,short) plan(3,none,none) plan(5,pull,none) plan(6,push,long)

SATISFIABLE

The next problem I solved using ASP was a silly exercise from a statistics book (Lehn, Wegmann, Rettig: Aufgabensammlung zur Einführung in die Statistik), translation mine:

Exercise 11c) Ten French married couples bid goodbye to each other: the men with the men by shaking hands, the women with the women by kisses on both cheeks, and women with the men likewise by kisses on both cheeks. How many kisses and how many handshakes take place?

The implicit assumption of the exercise is that all married couples consist of a man and a woman, but it’s more fun to solve it in a generic way. So let’s do that using ASP.

First, we model the people and their fixed binary gender (more assumptions, but else the exercise is truly underspecified):

person(0..19).

gender(man; woman).

{ gender(P, G) : gender(G) } = 1 :- person(P).

I decided to model a couple using an even and odd numbered person:

couple(A, B) :- person(A), person(B), A != B, A/2 == B/2.

Next, we model the handshakes. Two people shake hands if they are not part of the same couple, and both are men:

handshake(A, B) :- person(A), person(B), A < B, not couple(A, B), gender(A, man), gender(B, man).

The A < B ensures we only count one handshake between two men,

as handshaking is a symmetric act.

We also count the handshakes:

handshakes(N) :- N = #count { handshakes(A, B) : handshake(A, B) }.

Likewise, two kisses happen between two persons not in the same couple where one is a woman. Note that kisses are asymmetric since they go from mouth to cheek (this cost me an hour of debugging…):

kiss(A, B) :- person(A), person(B), not A == B, not couple(A, B), gender(A, woman).

kiss(A, B) :- person(A), person(B), not A == B, not couple(A, B), gender(B, woman).

kisses(N) :- H = #count { kisses(A, B) : kiss(A, B) }, N = H * 2.

Finally, we also count men and women for reporting purposes:

men(N) :- N = #count { g(P) : gender(P, man) }.

women(N) :- N = #count { g(P) : gender(P, woman) }.

#show handshakes/1.

#show kisses/1.

#show men/1.

#show women/1.

Thanks to clingo’s parallelization support, we can compute all possible 220 solutions very quickly:

Solving...

Answer: 1

women(0) men(20) kisses(0) handshakes(180)

Answer: 2

women(1) men(19) kisses(72) handshakes(162)

Answer: 3

women(1) men(19) kisses(72) handshakes(162)

...

Answer: 1048576

women(12) men(8) kisses(616) handshakes(26)

SATISFIABLE

Models : 1048576

Calls : 1

Time : 51.163s (Solving: 51.00s 1st Model: 0.10s Unsat: 0.01s)

CPU Time : 463.811s

Threads : 16 (Winner: 0)

We can also specialize the model to have only hetero couples:

:- Z = 0..9, not gender(2*Z, man).

:- Z = 0..9, not gender(2*Z+1, woman).

Then we get the unique solution:

Solving...

Answer: 1

women(10) men(10) kisses(540) handshakes(45)

SATISFIABLE

I hope this post could interest you in how Answer Set Programming can be used. Some more interesting programs can be found on Hakan Kjellerstrand’s blog.

Thanks to the xz backdoor, many people are now talking about the state of Linux packaging tools, and in particular build systems. As a maintainer of Void Linux and packager of many things, I have my five cents to add, so today I’ll be the contrarian and argue what autoconf got right. This is not an apology for GNU autotools; we are all well familiar with the issues they bring—yet some prospective replacements manage to be worse in certain aspects.

This is of course the hardest point to tackle for any new contestor that has not reached a critical mass.

In Void Linux, the GNU configure build style is the most popular; roughly 2250 of about 14300 package template use it, and an additional 120 use the generic configure build style, which works similarily.

As a packager, the worst thing is to find a custom made build system

that behaves totally different from what we know—if you decide to

write your own ./configure scripts, please stick to the conventions!

We packagers really have better things to do than figure out yet another

homebrew build system that’s used exactly once.

These conventions are standardized as part of the GNU Coding

Standards

and they specify many features that packagers expect, but developers

without own packaging experience are likely to miss. One example

is support for staged installation, i.e. DESTDIR. This is essential

for building packages that only contain the files that package

actually ships. And no, support for --prefix is not enough to

make up for this (if you wonder why, please read up the standards).

People who have been staring at ./configure output for too long may

want to disagree, but let me make my point: check-based configuration

is the only way to write software that will continue to work properly

in the future. If you instead keep a table of broken systems and

workarounds, it a) will not be updated for future systems, b) doesn’t

detect if the system was actually fixed (either by patching a bug, or

adding a missing feature). It’s also very unlikely the software

builds on an system unknown to the build system, even if it’s

standards-compliant otherwise.

Of course, the checks should be reasonable (and in practice, often are

excessive). If your code assumes a C99 environment, you don’t need to

check whether all C99 functions you use are available. Likewise, if

you don’t need macros for certain sizeof values, you don’t need to

check for them, either. And you never need to check if sizeof char

is actually 1—it literally can’t be anything else. Also, checking

for functions can be done incorrectly.

While checks are good, sometimes they are broken or a certain

configuration needs special override, because a feature can’t be

checked (for example, when cross-compiling). In this case, autoconf

scripts provide options to override checks with a predetermined

result; usually you can set an environment variable like

gt_cv_func_printf_posix=yes.

Likewise, if a library is installed at a special location, it’s also

easy to tell configure to use it.

Many other systems do checks, but only tell that something has failed.

Debugging this can be difficult. Autoconf writes what it does into a

config.log file, which is sometimes helpful to debug a check.

Cross-compilation is a build system feature that is often put in

second place, but as a maintainer of a system that heavily makes use

of it, I have a fair share of experience and can say that autotools

are one of the best systems to support cross-compilation. Especially

custom-made build systems are often very lacking. Cross-compilation

of C programs is not particularly hard in principle, but your build

system needs to know which code is going to run on the target, and

that programs which need to run during compilation (e.g. to precompute

tables or something) need to be compiled for the host (with different

CFLAGS and so on).

This is also a defining feature of autoconf, as usually a basic POSIX shell environment (or, say, something busybox) is enough to run the configure scripts. This is in particular important for packages needed for bootstrapping. If your build system needs Python, well, then you need to compile Python first; but to compile Python, you need to compile all of its dependencies, which hopefully don’t need Python then themselves to build…

However, for packages not directly relevant to bootstrapping a system this is not such an essential feature.

NP: Policy of 3—Let It Build

It is well known that a Scheme function definition is merely defining a lambda function to a variable:

(define (hypot a b)

(sqrt (+ (* a a) (* b b))))

This function could be written just as well:

(define hypot

(lambda (a b)

(sqrt (+ (* a a) (* b b)))))

Occasionally, we need this explicit style when a function needs to keep around some internal state:

(define generate-id

(let ((i 0))

(lambda ()

(set! i (+ i 1))

i)))

(generate-id) ;=> 1

(generate-id) ;=> 2

(generate-id) ;=> 3

A problem arises when we need multiple functions to share the state,

let’s say we also want a reset-id function:

(define i 0)

(define (generate-id)

(set! i (+ i 1))

i)

(define (reset-id)

(set! i 0))

(generate-id) ;=> 1

(generate-id) ;=> 2

(generate-id) ;=> 3

(reset-id)

(generate-id) ;=> 1

(generate-id) ;=> 2

Here, I had to make i a global variable to allow two toplevel

functions to access it. This is ugly, of course. When you did deeper

into the scheme specs, you may find define-values, which lets us write:

(define-values (generate-id reset-id)

(let ((i 0))

(values

(lambda ()

(set! i (+ i 1))

i)

(lambda ()

(set! i 0)))))

This hides i successfully from the outer world, but at what cost.

The programming language Standard ML has a nice feature to write this

idiomatically, namely local:

local

val i = ref 0

in

fun generate_id() = (i := !i + 1; !i)

fun reset_id() = i := 0

end

Here, the binding of i is not visible to the outer world as well.

It would be nice to have this in Scheme too, I thought.

Racket provides it,

but the implementation is quite hairy.

Since I mostly use Gerbil Scheme these days, I

thought perhaps I can reuse the module system. In Gerbil,

we could also write:

(module ids

(export generate-id reset-id)

(def i 0)

(def (generate-id)

(set! i (+ i 1))

i)

(def (reset-id)

(set! i 0)))

It is used like this:

(import ids)

(generate-id) ;=> 1

(generate-id) ;=> 2

(generate-id) ;=> 3

(reset-id)

(generate-id) ;=> 1

(generate-id) ;=> 2

So, let’s use the module system of Gerbil (which supports nested

modules) to implement local. As a first step, we need to write a

macro to define a new module and immediately import it. Since all

Gerbil modules need to have a name, we’ll make up new names using

gensym:

(import :std/sugar)

(defrule (local-module body ...)

(with-id ((mod (gensym 'mod)))

(begin

(module mod

body ...)

(import mod))))

Here, we use the with-id macro to transform mod into a freshly

generated symbol. defrule is just a shortcut for regular Scheme

syntax-rules, which guarantees a hygienic macro. We can use the

identifier mod inside the body without problems:

> (local-module (export mod) (def (mod) 42))

> (mod)

42

> mod

#<procedure #27 mod378#mod>

The internal representation of the function shows us it was defined in

a generated module. Re-running local-module verifies that fresh

modules are generated.

Now to the second step. We want a macro that takes local bindings and

a body and only exports the definitions of the body. Using the Gerbil

export modifier except-out, we can export everything but the local

bindings; this is good enough for us.

Thanks to the power of syntax-rules, this is now straight forward to

state:

(defrule (local ((var val) ...) body ...)

(local-module

(export (except-out #t var ...))

(def var val) ...

body ...))

We rewrite all let-style binding pairs into definitions as well, but we make sure not to export their names.

Now, we can write our running example like this:

(local ((i 0))

(def (generate-id)

(set! i (+ i 1))

i)

(def (reset-id)

(set! i 0)))

(generate-id) ;=> 1

(generate-id) ;=> 2

(generate-id) ;=> 3

(reset-id)

(generate-id) ;=> 1

(generate-id) ;=> 2

But perhaps you prefer the slightly less magical way of using modules directly (which is also what many recommend to do in Standard ML). Still, creating modules by macros for fine-grained scoping is a good trick to have up your sleeve.

[Addendum 2024-01-28:] Drew Crampsie showed me another trick with

nested modules that will help improve the local macro: when you

import a module into another, the bindings are not re-exported by

(export #t) (which means “export all defined identifiers”). (You

can use (export (import: module)) if you really wanted this.)

This means we don’t need the except-out trick, but we can just use

two nested modules instead:

(defrule (local ((var val) ...) body ...)

(local-module

(local-module

(export #t)

(def var val) ...)

(export #t)

body ...))

The inner module contains all the local bindings, the outer one the visible bindings made in the body. Now, definitions in the body can also shadow local bindings (not that this is very useful):

(local ((var 5))

(def (get)

var)

(def var 6))

(get) ;=> 6

NP: Dead Moon—Play With Fire

Frohe Weihnachten, ein schönes Fest, und einen guten Rutsch ins neue Jahr

wünscht euch

Leah Neukirchen

Merry Christmas and a Happy New Year!

NP: ANOHNI and the Johnsons—You Be Free

I drafted this article in Feburary for a blog project that never came to realization, but as it’s a nice little article so I’ll just post it here:

I was thoroughly nerdsniped by a recent post on The Unix Heritage Society mailing list, where this anecdote was mentioned by Noel Chiappa:

Steve Ward told another oral story which I’m pretty sure is true, though. They ask the candidate to design a state machine (or digital logic, I forget which) which can tell if a number is divisible by three (I think I have the details correct, but I’m not absolutely certain). So they describe one – and then point out that you can feed the number in from either end (most or least significant end first) – and proves that it will work either way! The committee was blown away.

Since this sounded like an interesting statement, I tried to find a proof myself and found two solutions, one using automata theory and one using ordinary math.

Read as a PDF: On division by three.

NP: Lightning Bolt—No Rest for the Obsessed

Ein Entwurfsmuster für kollektiv verwaltete Räume. (Entworfen um 2011 von Leah Neukirchen.)

Kontext: Verschiedene Waren sollen gegen ein Entgelt zur Verfügung gestellt werden können.

Problem: Die Waren haben unterschiedliche Preise, der Verkauf soll zudem keinen Verlust erzeugen.

Verwandte Muster:

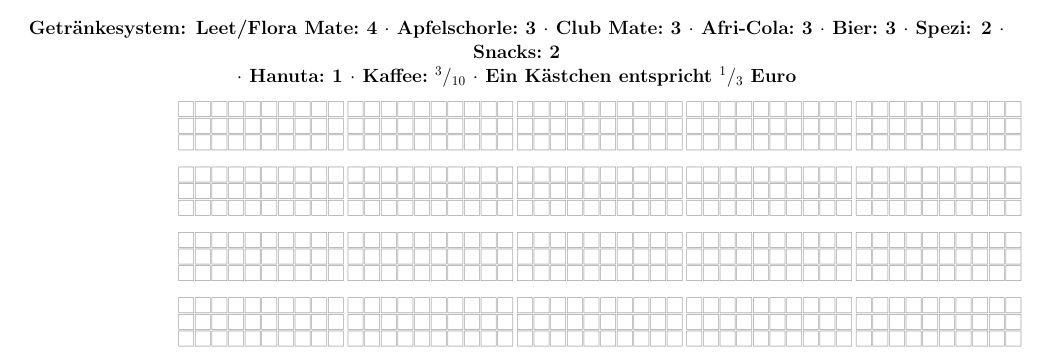

Lösung: Die Einführung eines PUNKTESYSTEMS. Dies besteht aus einem zentral angebrachten Blatt Papier auf dem jeder Nutzende eine Zeile belegt (Stammnutzer können eine Zeile in der Vorlage vorgedruckt bekommen).

Jede Zeile besteht aus z.B. drei Kästchen übereinander. Es wird ein Preis pro Kästchen spezifiziert (z.B. 33ct). Nutzende können nun in eine KASSE DES VERTRAUENS einzahlen, und die vorbezahlten Kästchen mit einem Stift markieren. Bei der Entnahme von Waren wird bezahlt, in dem man die entsprechende Anzahl Kästchen ausmalt. Dank der Vorkasse können Waren auf Vorrat eingekauft werden. Es ist darauf zu achten, dass niemand bei der Kasse Schulden macht.

Sind sehr viele Zeilen gefüllt, so wird ein neues Blatt ausgedruckt, und die geleisteten Vorzahlungen werden in neue Zeilen übertragen.

NP: Mrs. Piss—You Took Everything

You probably have seen ISO 8601 timestamps with fractional seconds, such as this one:

% date --iso-8601=ns

2023-04-20T18:45:11,094052607+02:00

However, many people don’t know ISO 8601 also allows for fractional minutes and hours!

According to the standard, these timestamps are equivalent (rounded to a second):

2023-04-20T18:45:11

2023-04-20T18:45,18333

2023-04-20T18,75305

Note that in contrast to common scientific usage, the decimal part is recommended to be separated by a comma and not a full stop, although the latter is permitted too.

However, the standard does not specify the obvious next generalization, that is, allowing fractional days. I thus propose to extend ISO 8601 in the following way, which does not change the meaning of valid existing representations:

The local time representation (after the optional time designator) may consist of only a decimal fraction, which then is interpreted as a multiple of 24 hours.

Thus, we can write the above timestamp also like this:

2023-04-20T,78137

2023-04-20,78137

Now, why would one want this? Essentially, there are three reasons:

First, it’s cute and an obvious extension of the existing format.

Second, it allows representing times of the French Republican Calendar in a natural way, which uses a decimal system as well: in this calendar, the day is divided into 10 hours of 100 minutes and 100 seconds each. Thus, the digits align directly to a decimal fraction of the whole day. The above timestamp is then (computed using fdate):

Primidi, 1 Floréal CCXXXI (231) 7:81:37

Note that we use local time here, not Paris time. If you insist on using Paris solar time, you need to offset 9 ISO minutes and 21 ISO seconds, which can be approximated as

2023-04-20T,77350+0009

Note that ISO 8601 does not allow for specifying offsets from UTC in seconds (another obvious oversight).

Finally, the mechanism also supports the use of Swatch Internet Time, a late 90s decimal time system. Here, the day is divided into 1000 beats, and the offset is fixed UTC+1 (for the Swatch headquarters in Biel):

2023-04-20T,739+0100

This is a bit more verbose than @739 but at least it’s an

international standard already!

NP: Tristan Brusch feat. Annett Louisan—Kein Problem